Three key questions about complex systems

Jonathan D. H. Smith
Department of Mathematics
Iowa State University
Ames, IA 50011, U.S.A.
E-mail: jdhsmith@math.iastate.edu
Website: /jdhsmith.math.iastate.edu/index.html

Introduction

In the course of studying complex systems, certain concepts feature prominently. Among these are the notions of hierarchical level, phase transition, and information. Unfortunately, considerable uncertainty still surrounds these concepts. In an attempt to reduce this uncertainty, the current paper intends to consider three key questions involving each of the concepts in turn. The questions are designed to provoke a discussion that may lead to some clarification of the concepts.

What constitutes a hierarchical level?

In discussions of complex systems, the concept of a hierarchical level is a theme that recurs constantly. Indeed, the presence of a hierarchy of levels is often used to characterise a system as being complex. Nevertheless, there are many different notions of hierarchical level, some more and some less well defined. What are the connections between them? Which roles can be played by each? These questions have been asked many times throughout the history of systems science. An early survey of possible answers was given by Wilson (1969).

Salthe initiated a classification of hierarchies with his distinction between specification and scalar hierarchies. For Salthe, this is the distinction between "levels of generality" (Salthe 1985: 166) and "matters of scale" (Salthe 1985: 47). However, it turns out that each of these classes is actually more ramified than is apparent from Salthe's discussion. While retaining Salthe's basic terminology, we will thus refine his classification, giving three examples of both specification and scalar hierarchies:

In this scheme, the distinction between specification and scalar hierarchies acquires a slightly different flavour. The specification hierarchies are formal, conceptual, or epistemological, while the scalar hierarchies are practical, real or ontological. Moreover, the distinction is not completely sharp. (Dare one say that it embodies the vagueness that Salthe appreciates?) For example, cognitive regularity definitely exhibits scalar features.

Partial orders

Partial orders are the basic abstract structure describing hierarchical organisation. A partial order consists of a collection of elements and an order relation on these elements, describable as "domination." The order relation is required to satisfy three axioms:

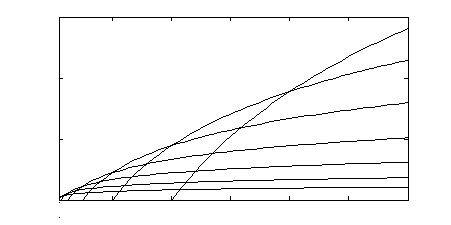
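
For reference, the three axioms usually required of a partial order are reflexivity, antisymmetry, and transitivity. The display below writes them out in standard notation, on the assumption that these are the axioms listed in the figure above. The symbol $\preceq$ (read here as "is dominated by") and the element names $x$, $y$, $z$ are choices made for this sketch and do not come from the original text.

```latex
\begin{align*}
&\text{Reflexivity:}  && x \preceq x
   && \text{for all } x; \\
&\text{Antisymmetry:} && x \preceq y \text{ and } y \preceq x \implies x = y
   && \text{for all } x, y; \\
&\text{Transitivity:} && x \preceq y \text{ and } y \preceq z \implies x \preceq z
   && \text{for all } x, y, z.
\end{align*}
```

A total order, such as the usual ordering of the positive numbers, satisfies the additional requirement that any two elements are comparable. An antichain is the opposite extreme, in which no two distinct elements are comparable.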

The language of partial orders is perfectly suited to the description of Salthe's specification hierarchies or levels of generality. For example, one may say that the physical level dominates the chemical level, which in turn dominates the biological level. On the other hand, the formal language also applies to the scales that are used to determine scalar hierarchies in Salthe's sense. For example, the scale of possible times may just be the set of positive numbers, with the usual total ordering. Bunge's concept of level (1979: 13) applies to the elements of one specific kind of partial order.

Set theory

Set theory starts by describing the world in terms of sets, collections of objects identified by properties they possess, or brought together merely by being listed together in a certain collection. The objects appearing in a particular set are called its elements or members. For example, one may consider the set of all positive integers, or the set consisting of the specific integers 5, 27, 33, and 100. A fundamental requirement of such a "crisp" set theory is that a given element either does or does not belong to a given set - there are no degrees of membership as in "fuzzy" set theory.

The initial naive programme founders on Russell's Paradox involving the set A consisting of all sets which are not members of themselves. The paradox arises when trying to answer the question: Is the set A itself a member of A? If A is a member of the set A, then it shares the property identifying members of that set, implying the contradiction that A is not a member of A after all. On the other hand, if A is not a member of the set A, then it does not share the property defining members of that set, whence the contradiction that A is a member of A.

The paradox is avoided by limiting the collections of objects that have the right to be called sets. On the one hand, various constructions (listed as axioms of set theory) enable one to put together certain collections as sets.
For example, the "Power Set Axiom" says that the power set of a given set S, namely the collection of all its subsets, again qualifies as a set. On the other hand, the collection A appearing in Russell's Paradox does not qualify as a set, since it cannot be constructed according to the axioms of set theory.

The resolution of Russell's Paradox exacts its price. The ban on the undesirable "set of sets which are not members of themselves" also excludes collections that mathematicians like to use, such as the collection of all sets. The problem is resolved by introducing a hierarchy of levels. At the bottom level are the "atomic" elements. The sets inhabit an intermediate level, while at a higher level one has classes. The collection of all sets is a class. The collection A of all sets which are not members of themselves is also a class. The troubling question of membership of A in itself does not arise, since the members of A are sets, while A itself is a class that is not a set. For a more detailed discussion, one may consult Chapter II and the Appendix of (Herrlich and Strecker 1973).

There are other examples of hierarchies of sets of certain kinds. The formal mathematical treatment of probability through measure theory relies on the concept of a sigma-algebra of sets, which is a collection of subsets of a space closed under complementation, finite intersections, and countable unions (Royden 1963: 12). In a topological space, Borel sets are elements of the smallest sigma-algebra that contains the open sets. There is then a hierarchy of increasingly complex Borel sets. Each level of the hierarchy consists of those sets obtained as either countable unions or countable intersections of sets from the preceding level of the hierarchy. For instance, the set of points of continuity of a real function is a countable intersection of open sets, and thus lies one level above the basic level of open sets (Royden 1963: 42).
Formally similar hierarchies appear in complexity theory, although it is still not known whether they in fact collapse to triviality.

Cognitive regularity

In an important paper addressing the mind-body problem, Wimsatt (1976) proposed a definition of a hierarchical level which may be described as cognitive regularity. The underlying assumption is that a given complex system ranges over a scale of different parameter values, say of length or time. For each particular parameter value, one may envisage a detector tuned to view the system through a small window of adjacent parameter values. At some tuned values, the detected signal will be regular, while at other tuned values, the detected signal will be irregular. Then according to Wimsatt, the natural levels of the system correspond to those parameter values that yield a "local maximum of predictability and regularity" (Wimsatt 1976: 238).

A limited but very concrete example is offered in North American markets by the portion of the electromagnetic spectrum from approximately 175 to 220 MHz. The levels of this system according to Wimsatt's definition are the VHF television channels from 7 to 13. A receiver tuned to one of these channels will detect a regular and all too predictable signal, while tuning away from these channels produces nothing but noise. Since the levels of the hierarchy are not related, the underlying partial order is an antichain.

Wimsatt's definition becomes harder to apply in deeper, more serious examples. In general, it may be hard to find a suitable parametrisation of the system. Even measuring "predictability and regularity" in an appropriate way is a major challenge to information theory. Nevertheless, Wimsatt does offer the observation that "under the pressure of selection, organisms are excellent detectors of regularity and predictability."
In an intriguing footnote (Wimsatt 1976: 238), he points out that predators seek to regularise their relations with their prey, while the prey try to irregularise their relations with their predators. The randomised path of a rabbit escaping from a cat is a good illustration of the latter, as indeed is much "Protean behaviour" in the sense of (Driver and Humphries 1988).

Returning to physical examples, Wimsatt suggests that "the most probable states of matter" determine "levels of organisation and foci of regularity" (Wimsatt 1976: 239). Unfortunately, this determination runs the risk of becoming circular. The problem arises when one tries to establish probabilities. The most probable states are those that maximise the entropy (Smith 2001), but applicable definitions of probability or entropy seem to require a specification of the underlying levels of a hierarchical structure to begin with.

Modularity

The term modular is used to denote linearly ordered scalar hierarchies in which the characteristic scale of each successive level of the hierarchy is around one order of magnitude larger than the characteristic scale of the preceding level. The most basic examples are provided by number systems such as the decimal, with its successive places for units, tens, hundreds, thousands, and so on. The ratio relating a pair of successive levels is called the modulus. The modulus need not be constant, as older measurement systems show (72 points form one inch, 12 inches form one foot, 3 feet form one yard, 22 yards form one chain, 10 chains form one furlong, 8 furlongs form one mile, 3 miles form one league).

Modular hierarchies arise from insistence on an approximately linear relationship between the entropy and the number of equiprobable states of a system. Without any hierarchical organisation, the entropy of a collection of n equiprobable states is proportional to the logarithm of n.
Once a modular hierarchy is introduced, the entropy becomes the approximately linear envelope or maximum of a series of logarithmic curves.
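
The older measurement system mentioned above is a modular hierarchy with a varying modulus, and its arithmetic can be sketched as a mixed-radix number system. The following Python sketch is an illustration only: the names `UNITS`, `MODULI`, `decompose`, and `points_per` are hypothetical, and the chain of units is taken from the list in the text, stopping at the mile.

```python
# Units of the older measurement system, from the lowest level upwards.
UNITS = ["point", "inch", "foot", "yard", "chain", "furlong", "mile"]

# MODULI[i] units of level i make one unit of level i + 1 (ratios as given in the text).
MODULI = [72, 12, 3, 22, 10, 8]


def decompose(points):
    """Write a length given in points as one mixed-radix 'digit' per level."""
    if points < 0:
        raise ValueError("length must be non-negative")
    digits = {}
    remaining = points
    for unit, modulus in zip(UNITS, MODULI):
        # Carry whole multiples of the modulus up to the next level.
        remaining, digit = divmod(remaining, modulus)
        digits[unit] = digit
    digits[UNITS[-1]] = remaining  # the top level has no upper bound
    return digits


def points_per(unit):
    """Number of points in one `unit`: the product of all moduli below that level."""
    size = 1
    for modulus in MODULI[: UNITS.index(unit)]:
        size *= modulus
    return size


print(points_per("mile"))
print(decompose(869))
```

With a constant modulus of 10 the same procedure yields the ordinary decimal digits, which is the sense in which the decimal system is the most basic modular hierarchy.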

A classic example due to Caianiello is the problem of carrying cash in order to cover everyday payments uniformly distributed over the range from 25 cents to 250 dollars, under the usual system in which vendors give change. The problem is solved by carrying some coins and some $1, $5, $10, $20, $50, and $100 bills. The coins and notes form a modular hierarchy with moduli in the range from 2 to 5. A further example is provided by the alphabetic system of writing: about 20 to 40 different letters, up to 30 letters to a word, so many words to a sentence, sentences to a paragraph, paragraphs to a section, sections to a chapter, chapters to a volume. Military organisations - so many soldiers to a platoon, so many platoons to a battalion, so many battalions to a regiment, so many regiments to a division - also form modular hierarchies. Simon's (1962) famous watchmaker Hora, assembling watches from modules themselves composed of submodules, is exploiting the structure of a modular hierarchy (with a reinforced meaning of the term "modular").

Physical hierarchies

One of the simplest and best-known physical systems complex enough to exhibit the spontaneous formation of ordered structures is the Rayleigh-Bénard convection cell experiment, in which liquid in an open container is heated steadily from below. At low rates of heating, conduction or disordered convection currents carry the heat up to the surface. Above a certain critical rate, these convection currents organise themselves into hexagonal cells. There is a two-stage hierarchical structure underlying the experiment, governed by two characteristic time scales that are proportional to each other, with a ratio of about $10^{10}$. The lower level is represented by the thermal motion of the liquid molecules. Its characteristic time is the mean time t between intermolecular collisions, of the order of $10^{-10}$ seconds (Rumer and Ryvkin 1980: §87).
The higher level is represented by the motion of the convection currents around the container. Its characteristic time is of the order of seconds.

The Rayleigh-Bénard convection cell experiment is prototypical for a broad class of hierarchical systems. Their levels operate at time scales which are all proportional to each other, but such that the constants of proportionality are orders of magnitude larger than the moduli of modular hierarchies. A thorough treatment of systems of this kind is given in (Auger 1989). The time scales of the various levels are all ultimately measurable in terms of what one might call calendar time, the classical time that is implicit in Newton's "dot" notation for time derivatives (cf. Smith 2000). Under the somewhat tentative hypothesis that this temporal commonality does not carry over to truly biological systems, it will be convenient to describe systems of the present type as physical hierarchies.

Biological hierarchies

In contrast with the modular and physical hierarchies described above, the term biological hierarchy is used to denote those systems that are complex enough to comprise subsystems whose intrinsic time scales are not proportional to each other. It is clear that the human brain falls into this category, since perceived time does not bear a constant relationship to calendar time (cf. e.g. Gruber, Wagner and Block 2000). One concept of organic time, not proportional to calendar time, was studied quite extensively in (Backman 1940).

A mathematically accessible example of a biological hierarchy appears in a statistical-mechanical analysis of the intensive demographic variables describing the female population of a given country (Smith 2000: §§6-11). Newtonian or calendar time governs the basic process of population growth at the national level. On the other hand, an individual mother's demographic effect on the system is expressed through the net maternity function.
This function specifies the probability that a new-born female will survive and enter a particular age class, multiplied by the average number of daughters likely to be borne by her while she sojourns in that age class. The function is peculiarly skewed when viewed using Newtonian time as the independent variable, but it takes on the familiar bell-shaped curve of a normal distribution when referred to the logarithm of the mother's age. In other words, it transpires that the logarithm of a mother's age is the natural, intrinsic time scale at the individual level, while calendar time is the intrinsic time scale at the population level. The two times are certainly not proportional to each other. Early in life, the individual's time changes rapidly with respect to calendar time, whereas later the rate of change decreases.

Current investigations are suggesting that a similar internal logarithmic time governs part of the seed germination process in foxtail weeds (Setaria). This process, the result of adaptation to widely fluctuating climatic and other conditions, appears to be rather complex, and is not yet well understood (Dekker, Dekker, Hilhorst and Karssen 1996). One rather dramatic interpretation of the process is that the foxtails, extremely limited in their ability to move through space, do possess the ability to move forward in time, their seeds lying dormant until the onset of favourable conditions.

How do phase transitions arise?

Phase transitions are one of the chief manifestations of complexity at the level of physics. They are generally described phenomenologically by a discontinuous change of output resulting from a continuous change of input. For example, at atmospheric pressure, the macroscopic physical properties of H₂O change dramatically as the temperature increases gradually from negative to positive Celsius values. However, it seems that there is no entirely satisfactory account of phase transitions.
Rumer and Ryvkin (1980: §80) summarise the classical approximate theories as follows:

"We considered some approximate theories permitting the construction of thermodynamics in the vicinity of the phase-transition points: the van der Waals theory, the Bragg-Williams approximation, the Landau theory, all said to be classical. All these theories lead to results disagreeing greatly with experimental results. . . . These contradictions are indicative of the fact that the classical theories are invalid exactly in the region for which they were elaborated."

They then go on to introduce exact theories:

"It is, therefore, expedient to consider some models admitting exact solutions, i.e. such models for which the partition function . . . can be found without any approximation."

Generally speaking, models of this type are lattice models, involving copies of a very simple system placed at each point of intersection of a grid (or lattice). For example, the Ising model (Rumer and Ryvkin 1980: 428) uses a rectangular grid, and the simple system placed at each point of intersection has just two states, conventionally described as "spin up" and "spin down." For relative ease of computation, the only internal interactions that are normally assumed to take place are between adjacent points on the grid (although more complicated cases have been considered). A phase transition is exhibited at a critical positive absolute temperature if ordered arrangements are likely below that temperature. (Above the critical temperature thermal disorder, with random arrangements of spins, is the only likely possibility.) Unfortunately, the only way that these models can exhibit phase transitions is by taking an infinite number of points, characterised by phrases such as "passage to the thermodynamic limit" (Israel 1979: 4). Thus the models are of little help in clarifying why large but finite physical systems, such as a pond in winter, do achieve phase transitions.
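
The reason is visible in a small computation. For a finite grid, the partition function is a finite sum of exponentials, hence a smooth function of the inverse temperature, so no discontinuity can appear at any finite size. The Python sketch below enumerates every spin arrangement of a tiny grid and sums the Boltzmann weights directly. It rests on assumptions not fixed by the text: a coupling constant of 1, free (non-periodic) boundaries, no external field, and the hypothetical function names `ising_energy` and `partition_function`.

```python
import itertools
import math


def ising_energy(spins, rows, cols, coupling=1.0):
    """Energy -J * (sum of s_i * s_j over adjacent pairs), with free boundaries."""
    energy = 0.0
    for r in range(rows):
        for c in range(cols):
            s = spins[r * cols + c]
            if c + 1 < cols:  # neighbour to the right
                energy -= coupling * s * spins[r * cols + c + 1]
            if r + 1 < rows:  # neighbour below
                energy -= coupling * s * spins[(r + 1) * cols + c]
    return energy


def partition_function(beta, rows, cols, coupling=1.0):
    """Exact Z(beta): sum of exp(-beta * E) over all 2**(rows*cols) arrangements."""
    return sum(
        math.exp(-beta * ising_energy(spins, rows, cols, coupling))
        for spins in itertools.product((-1, 1), repeat=rows * cols)
    )


# Z varies smoothly with beta for a 3-by-3 grid of "spin up" / "spin down" sites.
for beta in (0.0, 0.5, 1.0):
    print(beta, partition_function(beta, 3, 3))
```

The enumeration visits 2 to the power of the number of sites, so it is practical only for very small grids. It is meant to show the structure of the sum, not to serve as a simulation method.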
For a valid explanation, one needs more than the vague hope that large finite systems are "a little bit infinite".

Is there any way to obtain a realistic and exact theory of phase transitions? Certainly the information-theoretic technique of entropy maximisation is capable of producing soundly based and realistic accounts of natural systems with a minimum of computational effort, contrasting very favourably with the painful bottom-up analyses of traditional statistical mechanics (Jaynes 1963). On the other hand, discontinuous behaviour arises in optimisation theory when constraints take the form of inequalities. The behaviour may change discontinuously as an inequality constraint changes from ineffective (strict inequality) to binding (exact equality). One is thus led to examine the construction of entropy maximisation models of physical systems in which inequality constraints (other than those defining the usual simplex of probabilities) arise naturally.

What is the relationship between invariance and information?

Following the work of Noether and many others, the relationship between symmetry and invariance is generally well understood. In classical mechanics, for example, rotational symmetry corresponds to the invariance of angular momentum. The relationship between entropy and information, although deeper and less completely clarified, has also been explored quite extensively. Subsuming these considerations, attention now shifts to the connections between symmetry/invariance on the one hand, and entropy/information on the other.

Elitzur has related the significance of a piece of information to its invariance properties. Collier and Burch (2001) point out the way in which symmetry serves to reduce information content. Lin writes that "[t]he maximum entropy of any system . . . corresponds to . . . total loss of information, to perfect symmetry or highest symmetry, and to the highest simplicity" (1996: 367).
Indeed, he goes so far as to speculate that, "perhaps without exception, for any process, the system will evolve spontaneously toward the highest static or dynamic symmetry" (1996: 374). Contributions such as these give some hint of the importance of the question, and of the various problems it raises.

One of the major difficulties is that the symmetry of higher-level systems is rarely exact. Unfortunately, there does not yet seem to be a good general mathematical theory of approximate symmetry capable of mirroring the success of group theory in dealing with exact symmetry. As Rosen (1995: 127) states:

"Since the general theory of approximate symmetry is not very well developed[,] I do not think it worthwhile to go into many details. It suffices to state that it is possible to define approximate symmetry groups for state spaces equipped with metrics, and it is possible to define a measure of goodness of approximation for each approximate symmetry group."

Rosen here uses the term "metric" to denote what mathematicians usually call a pseudometric, i.e. he allows distinct points of the state space to have zero distance between them. He gives no reference for his definitions of "approximate symmetry group" or "goodness of approximation."

Currently, it appears that music is one of the few fields that has managed to cope satisfactorily with approximate symmetries:

"Now let us turn to the piano and, beginning at bottom A, play upwards from it, first twelve successive fifths, and then seven successive octaves. We shall arrive each time at the same note, the top A of the keyboard. Twelve fifths have now been fitted into seven octaves. Yet the Greeks discovered, . . . two thousand five hundred years ago, that twelve perfect fifths would exceed seven octaves by a small interval known as the comma of Pythagoras." (Lloyd and Boyle 1978: 9).

[As a multiplicative factor of frequencies, the comma of Pythagoras is $(3/2)^{12} \cdot 2^{-7}$, or about 1.0136.]
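
The size of the comma can be checked with exact rational arithmetic. The short Python sketch below computes twelve perfect fifths (frequency ratio 3/2 each) against seven octaves (ratio 2 each). The final two lines additionally assume equal temperament, in which each fifth is narrowed to $2^{7/12}$ so that twelve of them fit seven octaves exactly. That tuning is not named in the quoted passage and is introduced here only for comparison.

```python
from fractions import Fraction

# Twelve perfect fifths versus seven octaves, as exact frequency ratios.
twelve_fifths = Fraction(3, 2) ** 12
seven_octaves = Fraction(2) ** 7

# The comma of Pythagoras is the factor by which the fifths overshoot the octaves.
comma = twelve_fifths / seven_octaves
print(comma)         # exact ratio 531441/524288
print(float(comma))  # about 1.0136

# Assumption: in equal temperament each fifth is 2**(7/12), slightly below 3/2.
tempered_fifth = 2 ** (7 / 12)
print(tempered_fifth, tempered_fifth ** 12 / 2 ** 7)
```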
A thought-experiment

In order to consider the relationship between symmetry/invariance and entropy/information, it is useful to perform the following thought-experiment: A brand-new steel ball bearing is kept in the damp, salty air of a seaside location, and gradually allowed to rust. The system may be analysed as a three-stage physical hierarchy in the sense considered above. The molecular level is at the bottom, with its characteristic time of about $10^{-10}$ seconds. The middle level has a time scale of seconds. At the top is the long-term level, with a time scale of months or $10^{7}$ seconds.

In accordance with Collier and Burch's claim that symmetry reduces information content, the initial mesoscopic description is very short: a spherical ball of 10 mm radius, consisting of a certain grade of steel hardened according to a certain process. It is the spherical symmetry of the ball that enables this short description to be given. (One might also cast the symmetry as the invariance of the measurements obtained as the ball is gradually reoriented, on the time scale of seconds, within a micrometer.)

As time progresses, the spherical symmetry of the ball is "broken spontaneously" (in the physicists' jargon). The ball starts to pit in certain places, losing its mesoscopic spherical symmetry. An adequate mesoscopic description of the ball becomes longer, as the location and geometry of the pits have to be specified. It is instructive to observe how the progressive loss of mesoscopic symmetry is accompanied by an increase in mesoscopic entropy. The system does not appear to behave according to Lin's speculation. Indeed, it is evolving away from the highly symmetric initial state. It appears that Lin's conjecture can hold at best only for simple systems devoid of hierarchical structure.

What other lessons can be learned from the thought experiment? Certainly the initial spherical symmetry of the ball is only approximate.
In fact, one gains a hint as to how one might deal with approximate symmetry by treating it as a concept pertaining to hierarchical systems, namely as exact symmetry holding at one hierarchical level. The initial approximate spherical symmetry of the ball is exact spherical symmetry holding at the mesoscopic level. The spherical symmetry of the ball does not hold at the molecular level, where the ball appears as an agglomeration of iron, carbon, manganese, and other atoms. Nor does it hold at the long-term level, since there the ball is more or less pitted, gradually becoming a jagged pile of iron oxide. If one were to take various micrometer readings over the long-term time scale, one would not observe complete invariance.

In the thought-experiment, the mesoscopic symmetry-breaking may be construed as an amplification of inhomogeneity from the microscopic to the mesoscopic level. Layzer (1964) suggested a similar mechanism to account for the breaking of approximate symmetry at the gravitational level in the early universe. The gravitational asymmetries present at that time were not by themselves sufficient to account for the formation of stars and galaxies within the current age of the universe. Instead, Layzer proposed that initial short-range, low-level, electrostatic inhomogeneities (repulsive forces between protons and electrons) made their way up to the gravitational level.

Conclusion
